Preprocessing is a crucial step in machine learning: it transforms raw feature values into a format that ML algorithms can understand and use effectively.

To facilitate this transformation, the sklearn.preprocessing module offers a set of encoders.

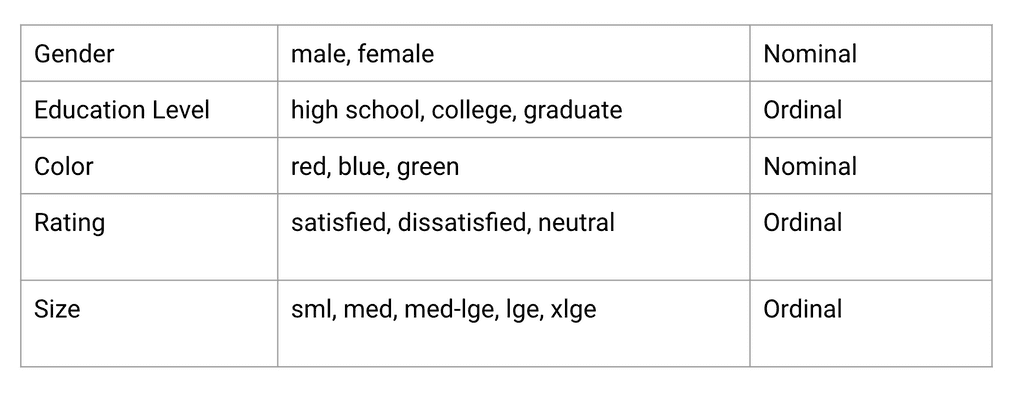

Understanding categorical variables

Categorical variables come in two types: ordinal variables, whose categories have a natural order (for example, pumpkin sizes from small to extra jumbo), and nominal variables, whose categories have no inherent order (for example, city names).

Such variables are common in real-world datasets, but most machine learning algorithms require numerical input. This is where encoding comes into the picture.
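As a minimal sketch of the ordinal/nominal distinction, pandas' Categorical type can record whether a set of categories carries an order. The rows below are made-up toy values that only borrow the dataset's size codes and city names.

```python
import pandas as pd

# Hypothetical toy values, not real rows from the pumpkin dataset
sizes = pd.Series(["med", "sml", "lge", "med"])
cities = pd.Series(["BOSTON", "CHICAGO", "BOSTON", "COLUMBIA"])

# Ordinal: declare the categories in their natural order
size_cat = pd.Categorical(sizes, categories=["sml", "med", "lge"], ordered=True)

# Nominal: no order is declared (ordered=False is the default)
city_cat = pd.Categorical(cities)

print(size_cat.codes)             # position of each value in the declared order
print(size_cat.max())             # max() is meaningful only for ordered categories
print(list(city_cat.categories))  # sorted lexically, but this order has no meaning
```

For the nominal column, calling max() would raise a TypeError, because pandas refuses to compare categories that were never declared as ordered.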

Why encoding is needed

ML models rely on mathematical equations to make predictions, so they cannot work with text values directly. Encoding converts these values into a numerical representation that the model can process and interpret, which helps it produce more accurate results.

Load the sample dataset

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns

# load the raw dataset; later code refers to it as pumpkins_full
pumpkins_full = pd.read_csv('./data/US-pumpkins.csv')
```

Types of available encoders

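Label Encoder

- Transforms categorical labels into integers from 0 to n_classes - 1

- Intended for target labels (y) rather than input features; it numbers the classes in sorted (alphabetical) order, so it does not capture any meaningful category order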
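A minimal sketch of that sorted numbering, using a hypothetical list of labels that mirrors the pumpkin colors:

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical target labels, mirroring the pumpkin colors
colors = ["WHITE", "ORANGE", "STRIPED", "ORANGE"]

le = LabelEncoder()
codes = le.fit_transform(colors)

# classes_ is sorted, so the integers follow alphabetical order
print(le.classes_)  # ['ORANGE' 'STRIPED' 'WHITE']
print(codes)        # [2 0 1 0]

# inverse_transform maps integers back to the original labels
print(le.inverse_transform([0, 2]))  # ['ORANGE' 'WHITE']
```

If the categories had a real order, this alphabetical numbering would generally not respect it, which is why ordinal features are better handled by OrdinalEncoder with an explicit category list.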

From this dataset, we will select the Color feature, which later serves as the target for the classification models, and apply LabelEncoder to it.

```python
## select required columns
features = ['Color', 'Origin', 'Item Size', 'Variety', 'City Name', 'Package']
pumpkins = pumpkins_full.loc[:, features]

## drop rows where Color is NaN (dropna returns a new DataFrame, so reassign it)
pumpkins = pumpkins.dropna(subset=['Color'])

pumpkins['Color'].unique()
# array(['ORANGE', 'WHITE', 'STRIPED'], dtype=object)
```

The feature consists of the values ORANGE, WHITE, and STRIPED. These colors have no natural order, so Color is a nominal categorical variable.

The code below applies LabelEncoder and stores the result in a new 'Color_Encoded' column, whose distribution you can then inspect.

```python
## apply label encoder
from sklearn.preprocessing import LabelEncoder

label_encoder = LabelEncoder()
encoded_label = label_encoder.fit_transform(pumpkins['Color'])
pumpkins['Color_Encoded'] = encoded_label
```

View the encoded values:

```python
pumpkins['Color_Encoded'].value_counts()
# 0    916
# 2    213
# 1     12
```

To examine the inverse mapping and revert the encoded values to their original form, use the inverse_transform method:

```python
list(label_encoder.inverse_transform([0, 1, 2]))
# ['ORANGE', 'STRIPED', 'WHITE']
```

Ordinal Encoder

- Ordinal Encoder is another encoding method provided by sklearn.preprocessing, designed for ordinal categorical features.

- Unlike Label Encoder, which works on a single column of target labels, the Ordinal Encoder works on one or more feature columns (a 2D input) and lets you specify the order of the categories.

- The Ordinal Encoder transforms each categorical value into a whole number, from 0 up to the number of categories minus 1.

- The order of the categories determines the encoding. By default (categories='auto') the categories are sorted alphabetically, which rarely matches their real order, so it is best to define the order explicitly when using this encoder.

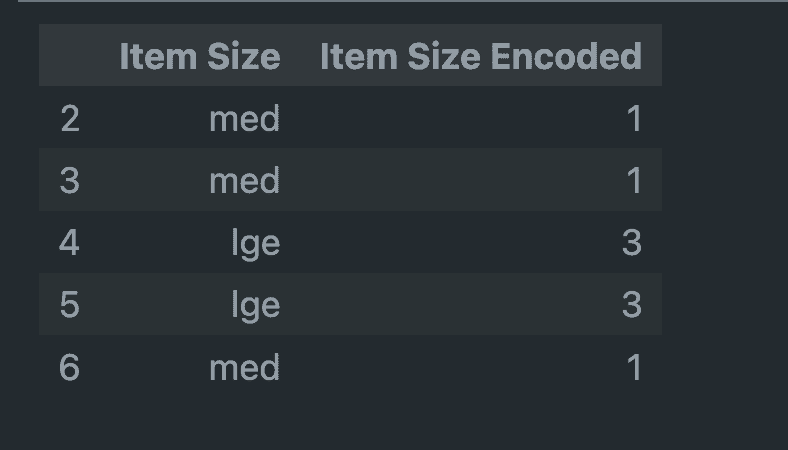

Here's a basic example of its usage:

```python
from sklearn.preprocessing import OrdinalEncoder

# categories listed from smallest to largest
item_size_categories = [['sml', 'med', 'med-lge', 'lge', 'xlge', 'jbo', 'exjbo']]
ordinal_features = ['Item Size']
ordinal_encoder = OrdinalEncoder(categories=item_size_categories, dtype=int)

## fit and transform
encoded_features = ordinal_encoder.fit_transform(pumpkins[ordinal_features])

## add to dataframe (ravel flattens the (n, 1) array into a single column)
pumpkins['Item Size Encoded'] = encoded_features.ravel()
```

In the code snippet above, we first import OrdinalEncoder from sklearn.preprocessing. We then define the order of the categories, from smallest (sml) to largest (exjbo), and pass it to the OrdinalEncoder constructor; dtype=int makes the encoder output integers instead of the default floats. Finally, we fit and transform the 'Item Size' data and store the encoded values in a new column called 'Item Size Encoded'.

```python
pumpkins[['Item Size', 'Item Size Encoded']].head()
```

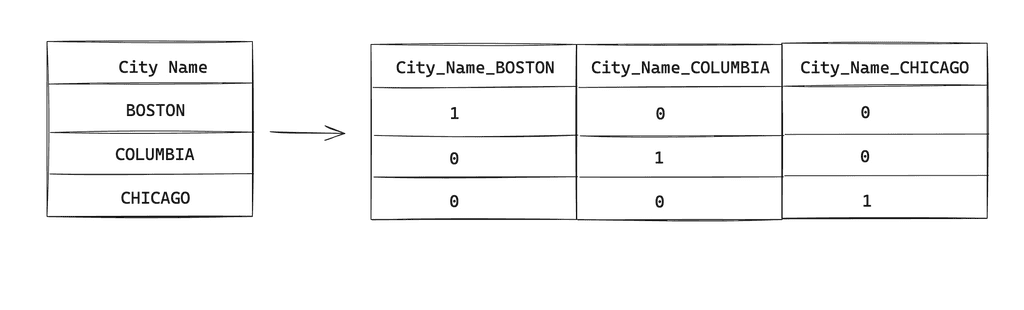
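To see why defining the order matters, here is a minimal sketch comparing the default alphabetical ordering with an explicit one. The four rows are hypothetical; only the size codes come from the dataset.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Hypothetical rows using the dataset's size codes
df = pd.DataFrame({"Item Size": ["sml", "lge", "med", "exjbo"]})

# Default categories='auto': categories are sorted alphabetically
auto_enc = OrdinalEncoder()
auto_codes = auto_enc.fit_transform(df[["Item Size"]])
print(auto_enc.categories_)  # exjbo, lge, med, sml

# Explicit order from smallest to largest
order = [["sml", "med", "med-lge", "lge", "xlge", "jbo", "exjbo"]]
explicit_enc = OrdinalEncoder(categories=order)
explicit_codes = explicit_enc.fit_transform(df[["Item Size"]])

print(auto_codes.ravel())      # [3. 1. 2. 0.]
print(explicit_codes.ravel())  # [0. 3. 1. 6.]
```

With the default ordering, "exjbo" (extra jumbo) gets the smallest code and "sml" the largest, which inverts the real size relationship; the explicit list keeps the codes increasing with size.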

One Hot Encoder

One Hot Encoder is another encoding technique provided by sklearn.preprocessing, suited to nominal categorical variables. Unlike the Label and Ordinal Encoders, which map each categorical value to a single numeric column, One Hot Encoder creates a separate binary column for each category in the feature.

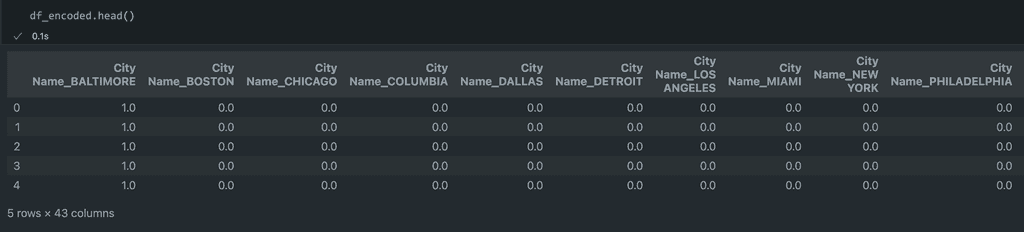

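Entries such as BOSTON, COLUMBIA, and CHICAGO in the “City Name” column are each turned into their own binary column. The code below encodes City Name together with the other nominal columns:

```python
from sklearn.preprocessing import OneHotEncoder

categorical_cols = ['City Name', 'Package', 'Variety', 'Origin']

# sparse_output=False returns a dense array (the parameter was named `sparse` before scikit-learn 1.2)
onehot_encoder = OneHotEncoder(sparse_output=False, drop='first')
encoded_features = onehot_encoder.fit_transform(pumpkins[categorical_cols])

# get_feature_names_out replaces the removed get_feature_names method
df_encoded = pd.DataFrame(
    encoded_features,
    columns=onehot_encoder.get_feature_names_out(categorical_cols),
    index=pumpkins.index,
)
```

In the code snippet above, we first import OneHotEncoder from sklearn.preprocessing and define the columns to encode. We then fit and transform the data. Because sparse_output=False is set, the output is a dense NumPy array rather than a sparse matrix. We also set drop='first', which drops the column of the first category in each feature, to avoid multicollinearity, a problem for some ML algorithms such as unregularized linear regression.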

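Remember that OneHotEncoder returns a NumPy array (or, by default, a SciPy sparse matrix), not a DataFrame. To keep working with pandas, wrap the result in pd.DataFrame, as in the snippet above, using the encoder's feature names as column labels.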

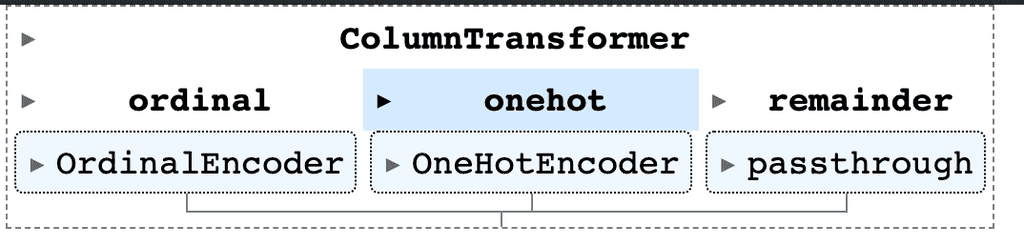

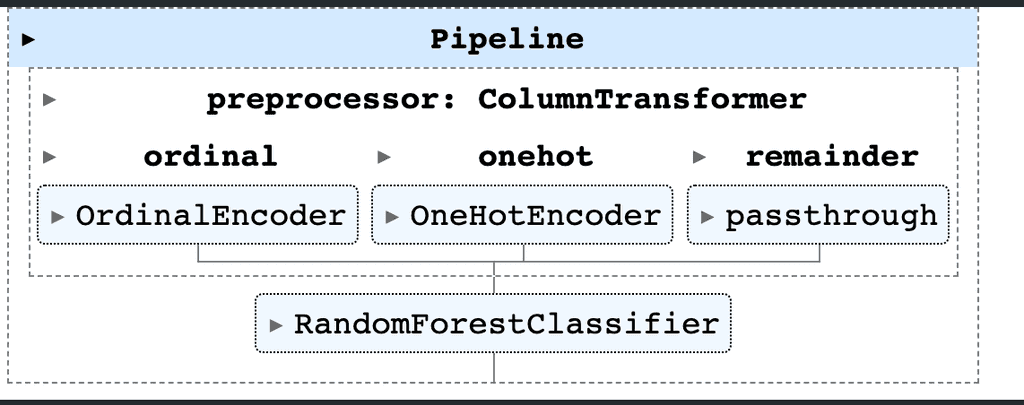

Column Transformer

Having covered the individual encoders, we will now see how to combine them with ColumnTransformer, using the same example.

When a dataset contains columns that need different preprocessing, such as numeric and categorical columns or ordinal and nominal ones, ColumnTransformer applies a separate transformer to each group of columns and concatenates the results into a single feature matrix.

```python
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline

# Ordinal features
ordinal_features = ['Item Size']
ordinal_encoder = OrdinalEncoder(categories=[['sml', 'med', 'med-lge', 'lge', 'xlge', 'jbo', 'exjbo']])

# Categorical (nominal) features
categorical_features = ['City Name', 'Package', 'Variety', 'Origin']
# handle_unknown='ignore' encodes categories unseen during fit as all zeros
# instead of raising an error when the test set is transformed
onehot_encoder = OneHotEncoder(handle_unknown='ignore')

# Use a column transformer to combine multiple encoders
preprocessor = ColumnTransformer(
    [
        ('ordinal', ordinal_encoder, ordinal_features),
        ('onehot', onehot_encoder, categorical_features),
    ],
    remainder='passthrough',
)
```

With ColumnTransformer, we can apply different encoders to different features through a single object: one call to fit_transform (or to fit, inside a pipeline) preprocesses all the columns at once.

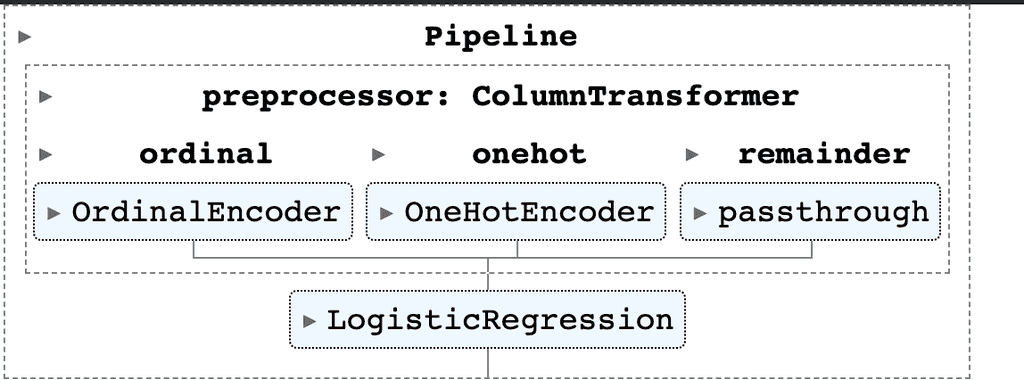

Applying Pipeline

In scikit-learn, a pipeline streamlines routine work such as feature preprocessing and model building. It bundles a sequence of data processing steps and a final model into a single object.

The purpose of a pipeline is to assemble several steps that can be cross-validated together while setting different parameters. This ensures that all the preprocessing steps are applied consistently to both the training and testing datasets.

```python
from sklearn.linear_model import LogisticRegression

# Now you have a full prediction pipeline
model = Pipeline(steps=[('preprocessor', preprocessor),
                        ('classifier', LogisticRegression())])
```

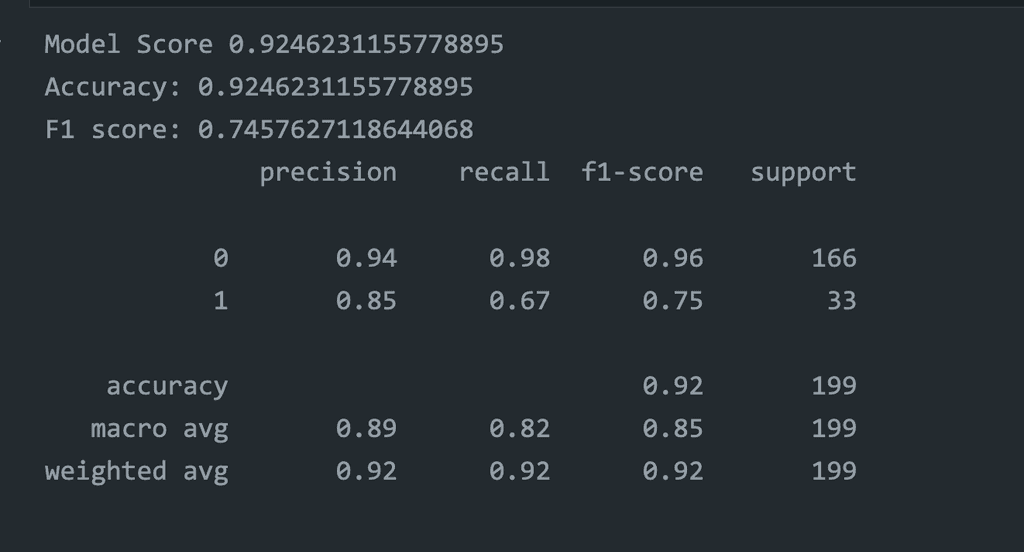
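The pipeline description above mentions cross-validation. As a minimal sketch on hypothetical toy data (not the pumpkin dataset), cross_val_score can evaluate a whole pipeline, refitting the encoder on the training part of each fold:

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder

# Hypothetical toy data: the label depends only on the city
X = pd.DataFrame({"City Name": ["BOSTON", "CHICAGO"] * 10})
y = [0, 1] * 10

pipe = Pipeline(steps=[
    ("preprocessor", ColumnTransformer([
        ("onehot", OneHotEncoder(handle_unknown="ignore"), ["City Name"]),
    ])),
    ("classifier", LogisticRegression()),
])

# Each fold fits the encoder and the classifier on its training part only,
# so the held-out part never influences the preprocessing
scores = cross_val_score(pipe, X, y, cv=5)
print(scores)
```

Because the labels are fully determined by the city in this toy setup, every fold scores perfectly; on real data the per-fold scores would vary.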

Model Training

- Logistic Regression

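```python
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, f1_score, classification_report

X = pumpkins[['City Name', 'Package', 'Variety', 'Origin', 'Item Size']]
y = pumpkins['Color_Encoded']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# the pipeline must be fitted before it can score or predict
model.fit(X_train, y_train)

print("Model Score", model.score(X_test, y_test))

y_pred = model.predict(X_test)

print(f'Accuracy: {accuracy_score(y_test, y_pred)}')
# Color has three classes, so f1_score needs an explicit average
# (the default average='binary' raises an error for multiclass targets)
print(f'F1 score: {f1_score(y_test, y_pred, average="weighted")}')
print(classification_report(y_test, y_pred))
```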

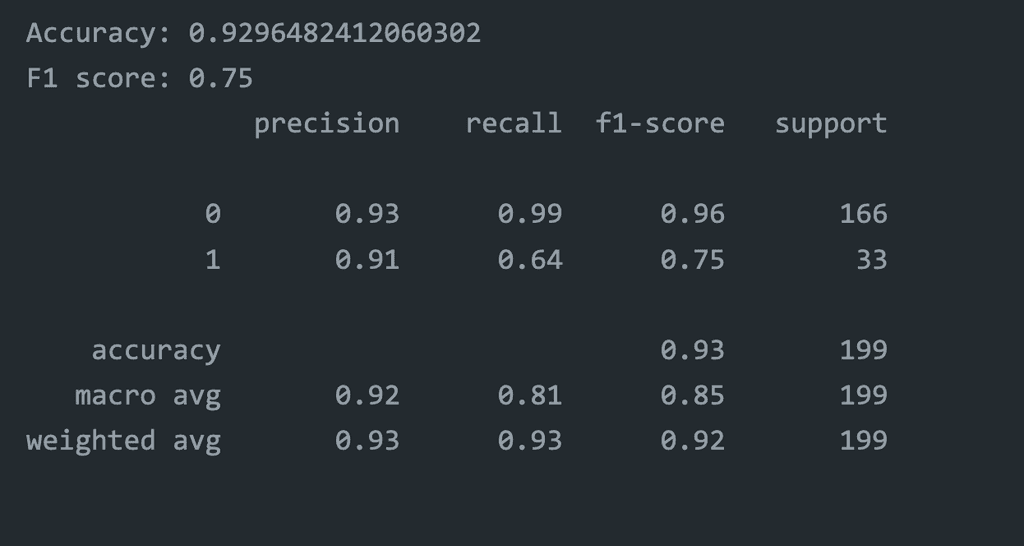
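Because the Color target has three classes, f1_score needs an averaging strategy. A minimal sketch on hypothetical labels shows the difference between the two common choices and the error raised by the default:

```python
from sklearn.metrics import f1_score

# Hypothetical three-class labels, like the encoded Color target (0, 1, 2)
y_true = [0, 0, 0, 0, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 2, 0]

# The default average='binary' only supports two classes
try:
    f1_score(y_true, y_pred)
except ValueError as err:
    print("binary average fails:", err)

macro = f1_score(y_true, y_pred, average="macro")        # every class counts equally
weighted = f1_score(y_true, y_pred, average="weighted")  # classes weighted by support
print(round(macro, 3), round(weighted, 3))
```

With an imbalanced target such as Color, where ORANGE dominates, the macro average gives the rare STRIPED class as much influence as ORANGE, while the weighted average mostly reflects performance on the frequent classes.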

- Random Forest

```python
from sklearn.ensemble import RandomForestClassifier

rf_model = Pipeline(
    steps=[
        ("preprocessor", preprocessor),
        (
            "classifier",
            RandomForestClassifier(n_estimators=1000, max_depth=5, random_state=45),
        ),
    ]
)

rf_model.fit(X_train, y_train)
print("Model Score", rf_model.score(X_test, y_test))

y_pred_rf = rf_model.predict(X_test)

print(f'Accuracy: {accuracy_score(y_test, y_pred_rf)}')
# multiclass target: use an explicit average for the F1 score
print(f'F1 score: {f1_score(y_test, y_pred_rf, average="weighted")}')
print(classification_report(y_test, y_pred_rf))
```

Conclusion

In conclusion, sklearn provides a comprehensive set of tools for preprocessing data and building machine learning models. Encoders, ColumnTransformer, and Pipeline simplify handling categorical variables, applying distinct transformations to different columns, and chaining preprocessing steps with model building. Beyond streamlining the workflow, they ensure the same steps are applied consistently to the training and testing datasets. With these tools, machine learning practitioners can focus more on improving model performance and less on routine tasks.